Unbiased Review of Panorad’s Pathfinder

Mike Larson, MD, PhD, Assistant Professor of Radiology, University of California, Davis

* I currently have no financial interest in Panorad, but certainly hope to in the future because I find this radiologist-developed software very useful.

No one from Panorad has seen or directly influenced the writing of this review.

The opinions expressed are my own and not my employer’s.

Neither Dr. Covington nor The Radiology Review has any financial interest in Panorad. This article is an unsolicited review submitted by Dr. Larson.

As a resident physician, I filled out a form to get “referring doctor” access to images from the private radiology group that competed with the university system where I was training. When the CD for a transferred patient failed to upload to our system, I pulled up the images on this outside imaging website, and one of the surgeons was very impressed. I thought it was a minor thing, but the surgeon insisted that a radiologist who doesn’t “read on an island” is a great radiologist.

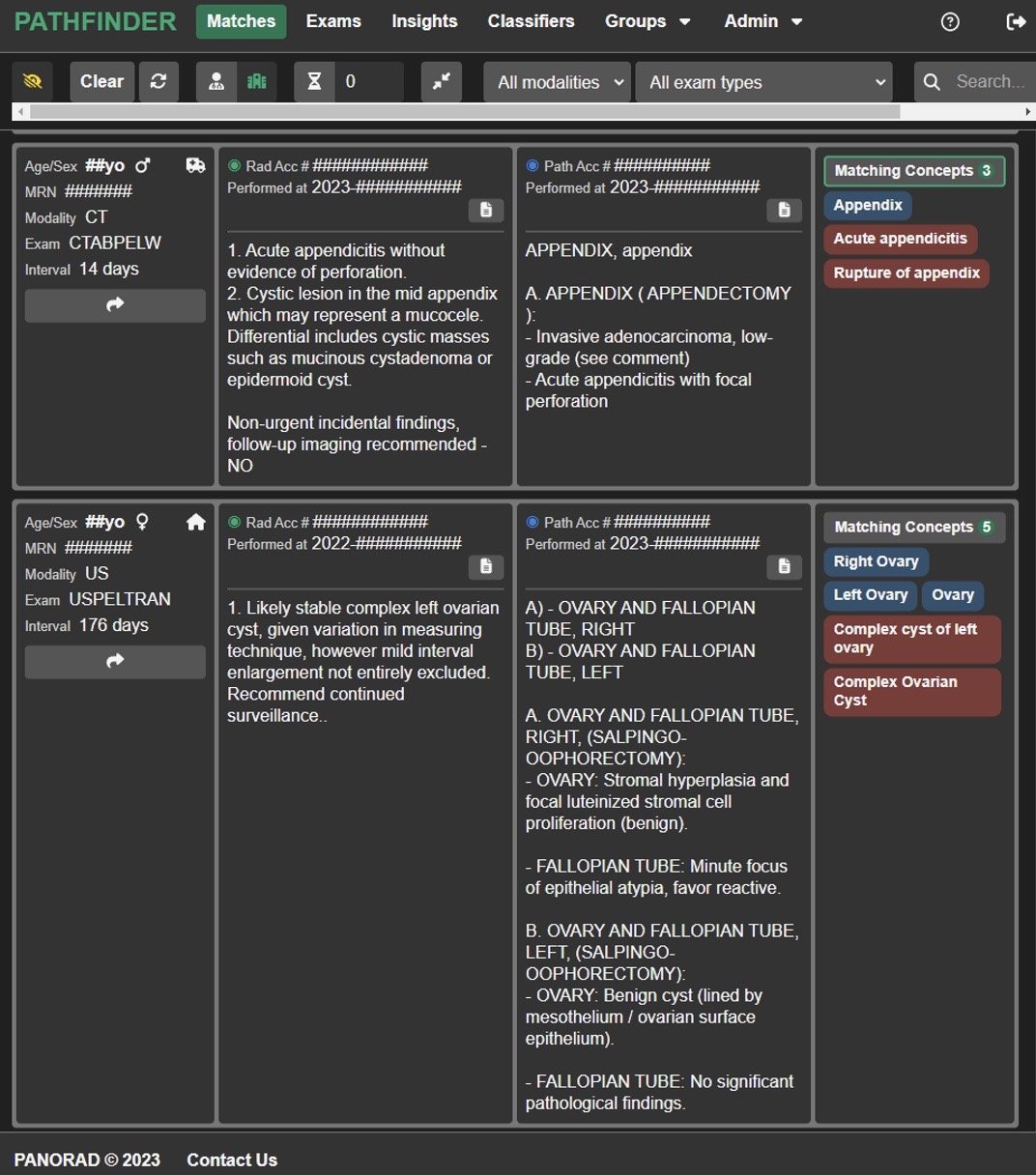

Pathfinder is a tool that bridges diagnostic radiology and clinical outcomes by linking radiology with pathology. In its most basic form, Pathfinder makes pathology follow-up of radiology reads very easy: for patients with the same medical record number, it directly links radiology reports to pathology reports that share “matching concepts.” Most radiologists I know put interesting or challenging cases in a follow-up folder in their PACS. While I still have a bloated PACS follow-up folder from before Pathfinder that I haven’t gotten around to reviewing, I am caught up on everything since Pathfinder became available, because its Match function makes follow-up effortless.

Above is a screenshot of a few of my general institution matches. It shows that the acute perforated appendicitis and cystic lesion my colleague called were confirmed by the final pathology report 14 days later, and that an ovarian cyst called benign on ultrasound was in fact benign on pathology 176 days later. Before Pathfinder, the former case would have ended up in my “follow-up” folder (was the cyst really a mucinous neoplasm, or just fluid from the perforated appendicitis?). The latter example, in which we expected a benign lesion that nonetheless ended up under the microscope, can also provide useful insights. This tool has boosted my diagnostic confidence as a newer attending.

While the matching concepts aren’t perfect, Pathfinder has made rad-path correlation remarkably easy. For example, mediastinal lymph node biopsies show up in my matches even when I have read only a CT abdomen/pelvis, because “Lymph nodes” and “Lower chest” are in my CT abdomen/pelvis template, so pathology reports of mediastinal lymph nodes naturally match. Even from these less useful matches, I can still learn from other radiologists’ reports.
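Panorad does not describe how its concept matching works, so the following is only a minimal sketch of one plausible approach, assuming a match requires (a) the same medical record number and (b) at least one shared concept found by simple keyword lookup. The `CONCEPTS` vocabulary, the `Report` class, and the `match_reports` function are hypothetical. The sketch reproduces the lymph node example: template text alone is enough to create a match.

```python
from dataclasses import dataclass

# Hypothetical concept vocabulary: concept name -> keywords that signal it in report text.
CONCEPTS = {
    "lymph_node": ["lymph node", "lymphadenopathy"],
    "chest": ["lower chest", "mediastinal", "mediastinum"],
    "appendix": ["appendix", "appendicitis"],
    "ovary": ["ovary", "ovarian"],
}


@dataclass
class Report:
    mrn: str   # medical record number
    text: str  # free-text report body


def extract_concepts(text: str) -> set[str]:
    """Return every concept whose keywords appear in the text (case-insensitive substring match)."""
    lowered = text.lower()
    return {name for name, keywords in CONCEPTS.items() if any(k in lowered for k in keywords)}


def match_reports(rads: list[Report], paths: list[Report]) -> list[tuple[Report, Report, set[str]]]:
    """Pair each radiology report with pathology reports for the same MRN that share >= 1 concept."""
    matches = []
    for rad in rads:
        rad_concepts = extract_concepts(rad.text)
        for path in paths:
            if path.mrn != rad.mrn:
                continue  # different patient: never a match
            shared = rad_concepts & extract_concepts(path.text)
            if shared:
                matches.append((rad, path, shared))
    return matches
```

With a CT abdomen/pelvis report reading “Lower chest: Clear. Lymph nodes: No lymphadenopathy.” and a mediastinal lymph node pathology report for the same patient, the two share the `lymph_node` and `chest` concepts even though the CT was normal. Handling negation or ignoring template headings would be one way to suppress such matches.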

Another aspect of Pathfinder that appropriately boosts (or destroys) confidence is the “Insights” portion. Insights shows the exams you have reported (whether as a trainee or finalized as an attending), your diagnostic statistics, and a Reporting and Data System-type section.

After a recent case in which CT and nuclear medicine (NM) biliary imaging agreed in suggesting no cholecystitis, while ultrasound and MRI raised concern for cholecystitis, I was curious about the diagnostic accuracy of the NM exam at our institution. I queried NM biliary scans for matched pathology reports mentioning “cholecystitis” and set the cut-off at 45 days, so that any case without a pathology report mentioning “cholecystitis” by then would be considered “Path -.” The accuracy wasn’t perfect, but it was on par with published values [1].
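The 45-day cut-off effectively turns follow-up into a binary reference standard. As a rough sketch of that logic (not Pathfinder’s actual query), assume each exam records its date, whether the reader called the finding positive, and any dated pathology reports for that patient: a case is “Path +” only if a report mentioning the search term appears within the window, and “Path -” otherwise. `Exam`, `path_label`, and `summarize` are hypothetical names.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Exam:
    exam_date: date
    called_positive: bool  # reader's call, e.g. "cholecystitis present"
    path_reports: list[tuple[date, str]] = field(default_factory=list)  # (report date, text)


def path_label(exam: Exam, term: str, window_days: int = 45) -> bool:
    """True ("Path +") if a pathology report within window_days after the exam mentions term."""
    for report_date, text in exam.path_reports:
        delta = (report_date - exam.exam_date).days
        if 0 <= delta <= window_days and term.lower() in text.lower():
            return True
    return False  # no qualifying report by the cut-off -> "Path -"


def summarize(exams: list[Exam], term: str, window_days: int = 45) -> dict[str, float]:
    """Sensitivity, specificity, and accuracy of the reader's calls against the windowed label."""
    tp = fp = tn = fn = 0
    for exam in exams:
        truth = path_label(exam, term, window_days)
        if exam.called_positive and truth:
            tp += 1
        elif exam.called_positive:
            fp += 1
        elif truth:
            fn += 1
        else:
            tn += 1
    total = tp + fp + tn + fn
    nan = float("nan")
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else nan,
        "specificity": tn / (tn + fp) if tn + fp else nan,
        "accuracy": (tp + tn) / total if total else nan,
    }
```

The window length is a trade-off: too short, and a delayed cholecystectomy is counted as “Path -”; too long, and unrelated later disease may be counted as “Path +”.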

Another useful part of Insights is the Reporting and Data System-type section, which matches your category assignments to clinically relevant cancer so that you can see how well you are performing relative to published standards and peers.

Here is a screenshot of the PI-RADS tab of the Insights portion of Pathfinder, showing published ranges (green bar), group ranges (blue bar), and an individual’s diagnostic performance (yellow bar). Besides making performance easy to see, it also shows that this particular radiologist uses PI-RADS 3 more frequently than the group does and thus has a wider spread. While I have found this useful for professional improvement, I’m sure marketers could find this section useful too, given the growing expectation of transparency. Again, it isn’t perfect, since matches are missing for a few biopsies that were interpreted outside this healthcare system, but the tool makes publication (whether for research or marketing) tremendously easy.
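One plausible way to compute per-category numbers like those behind this chart is sketched below, assuming each read is reduced to a PI-RADS category plus whether a matched biopsy showed clinically significant cancer (`None` when no biopsy matched, as with biopsies interpreted elsewhere). The function `pirads_summary` and its input format are hypothetical; running it once over the group’s reads and once over an individual’s reads would give two sets of values to compare.

```python
from collections import Counter
from typing import Optional


def pirads_summary(reads: list[tuple[int, Optional[bool]]]) -> dict[int, dict[str, float]]:
    """Per PI-RADS category: share of all reads, and PPV for clinically significant cancer.

    Each read is (category, cs_cancer): cs_cancer is True/False from a matched
    biopsy, or None when no biopsy was matched.
    """
    total = len(reads)
    usage = Counter(cat for cat, _ in reads)
    biopsied = Counter(cat for cat, cs in reads if cs is not None)
    positive = Counter(cat for cat, cs in reads if cs)  # only reads where cs is True
    summary = {}
    for cat in sorted(usage):
        summary[cat] = {
            "frequency": usage[cat] / total,
            # PPV uses only reads with a matched biopsy as the denominator.
            "ppv": positive[cat] / biopsied[cat] if biopsied[cat] else float("nan"),
        }
    return summary
```

Reads with no matched biopsy still count toward usage frequency but drop out of the PPV denominator, which is one way missing outside biopsies can skew the figures.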

Pathfinder isn’t only for diagnostic radiologists, either: it has also made image-guided biopsy follow-up remarkably straightforward.

Above is a screenshot showing the matches of image-guided biopsies with the resulting pathology reports. Being notified of an “insufficient” or “non-diagnostic” biopsy sample can be disappointing, but it is not disheartening when Pathfinder shows me that the vast majority of my biopsies yield a diagnosis.
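Diagnostic yield is simply the fraction of biopsies whose pathology report is diagnostic. The sketch below assumes, hypothetically, that a non-diagnostic report can be flagged by keyword (“insufficient”, “non-diagnostic”); this is a crude stand-in for a trained NLP classifier, and `is_diagnostic` and `diagnostic_yield` are illustrative names only.

```python
# Hypothetical keyword list; a real system would need to handle negation and phrasing variants.
NON_DIAGNOSTIC_TERMS = ("non-diagnostic", "nondiagnostic", "insufficient")


def is_diagnostic(path_text: str) -> bool:
    """Treat a pathology report as diagnostic unless it contains a non-diagnostic keyword."""
    text = path_text.lower()
    return not any(term in text for term in NON_DIAGNOSTIC_TERMS)


def diagnostic_yield(path_texts: list[str]) -> float:
    """Fraction of matched biopsy pathology reports that are diagnostic."""
    if not path_texts:
        raise ValueError("no matched biopsies")
    return sum(is_diagnostic(t) for t in path_texts) / len(path_texts)
```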

Newer Pathfinder features that I haven’t yet explored include natural language processing (NLP) Classifiers, which train the computer to apply labels automatically so that I can more easily find, for example, non-diagnostic biopsies or other diagnoses; tools for curating and sharing cases for peer learning; and continuous quality improvement (CQI) and quality assurance (QA) management.

While this isn’t a groundbreaking solution to the growing imbalance between radiology human capital and radiology expectations that Dr. Covington discussed in a prior article [2], it definitely tips the scales toward humans. If you are interested, you can learn more at https://panorad.io/.

But why follow up on our cases, even when Pathfinder makes it effortless? We don’t get reimbursed for following up, and the ABR recently eliminated the self-assessment CME requirement [3], so there are no clear extrinsic motivators. I’m far from the perfect radiologist, but I follow up because I’m always trying to improve, and because of the timeless principle the surgeon reinforced to me as a resident: a radiologist who doesn’t read on an island is a truly helpful radiologist.

References:

1. Kalimi R, Gecelter GR, Caplin D, et al. Diagnosis of acute cholecystitis: sensitivity of sonography, cholescintigraphy, and combined sonography-cholescintigraphy. J Am Coll Surg. 2001;193(6):609-613. doi:10.1016/s1072-7515(01)01092-4

2. Covington M. Is Imbalance in Medical Imaging Risking an Impossible Future for Radiologists? The Radiology Review. Published January 2, 2023. Accessed April 25, 2023. https://www.theradiologyreview.com/the-radiology-review-journal/medical-imaging-advances-dont-always-advance-us

3. Campbell R. ABR. The American Board of Radiology. Published March 29, 2022. Accessed April 25, 2023. https://www.theabr.org/blogs/abr-eliminating-sa-cme-requirement-for-ola-participants


Do you want to contribute to The Radiology Review Insider?

The article submission process is simple: email your proposed article to theradiologyreview@gmail.com. Include with your article your name and professional affiliation. Submission of all articles is appreciated but submission does not guarantee publication.

The Radiology Review may receive referral fees from purchases made with links on this page.